OUT OF YOUR HEAD – Part 2

The Virtual Audio Processing System (VAPS) was born from the idea that 3-D spatial processing of an audio signal would be much more practical and useful in music and film production if you could have discrete control over various spatial parameters in real time. Recording with a dummy head microphone has its unique uses in productions, but once the audio has been recorded, you can no longer make any alterations or changes. In modern multi-track recording, this method of recording has too many restrictions.

For those of you who may be unfamiliar with a dummy head microphone, it is a replica of the human head with a microphone built into each ear. When the recorded audio signal is reproduced through high-quality headphones, the listener perceives a sound image almost identical to the one he would have heard at the recording location of the dummy head (head-related stereophony, or binaural recording).

Using a dummy head, one has to very carefully choose and map out beforehand exactly the environment and the position of the dummy head in relation to the music or audio being recorded. More often than not, the actual musicians or the various sources of audio have to be physically repositioned to sound spatially correct when listening to the playback via headphones. This repositioning can cause many problems. Musicians want to be in a certain proximity to one another to best hear each other. Moving them to satisfy spatial position would not be a priority to them. It takes time to do the physical repositioning, not good when one is paying top dollar for the recording studio time per hour. Sometimes the sounds being recorded cannot be moved and the only alternative is to compromise and find the best position possible for the dummy head.

Another big problem is the acoustics of the environment. If one is just trying to capture a live event from the audience perspective, then the dummy head is a great tool for this. But if one is trying to make a high quality produced CD, bad acoustics can really destroy the recording and therefore the listening experience. Also, the engineer does not have control of the balance of each instrument that independent mic placement can accomplish. Once the recording is made, the EQ and the balance of the individual instruments cannot be changed or altered.

Another drawback of a binaural recording made with a dummy head is that it is very difficult to edit. Normal stereo recording is basically two dimensional. One only has to consider the X axis coordinates. If the engineer needs to cut and splice an audio file, it is a fairly straightforward procedure. But a binaural recording is three dimensional. In addition to the X axis, it also contains the Y and Z axis coordinates. If there is any change whatsoever in the positioning of the sound elements contained in an audio scene, edits can become very noticeable. For example, an edit that changes sound elements along the X axis may sound fine, but any change in the Y and Z coordinates is very easy to hear.

I have experimented in using a dummy head for multi-track overdubbing. There are problems with this procedure too. Usually for the overdubbed audio tracks to blend properly, the acoustic properties of the environment are very important. If the engineer does not change the position of the dummy head, then the tracks will blend together fine. There is a caveat though. Each track you record has included another instantiation of the acoustic reverberance of the room. After recording just a few tracks, this reverberance builds up to an unnatural level very quickly. If the engineer uses a recording booth with very little reverberance, he can combine more tracks together without this problem.

But recording with very little ambience or reverb causes another problem. When recording binaurally in a space with no reverb or ambience, the spatial quality (i.e. the dimensional attributes) is compromised. The reverberant field is very important for our perception of distance. In an anechoic environment (no ambience or reverberation), it is very difficult for our hearing system to determine distance.

As you can see, there are many limitations to using a binaural dummy head microphone. As I mentioned above, if the engineer wants to capture a live audio event as it really sounds to a person in the audience, a virtual audio experience, then the dummy head is a great choice and the realism of “you are there” can be quite extraordinary.

Use of a dummy head microphone does not lend itself well to modern multi-track recording techniques. For spatial audio to become mainstream, it has to work in all kinds of situations without becoming problematic. The engineer needs to control the position of a sound along the X, Y and Z axes independently of the room acoustics. The room acoustics also must be head-related (three-dimensional). The sound sources need to change position over time. The acoustics must be able to change in real time as well. This task clearly is something that could be done by a computer.

Highly motivated by this concept, I went to work, and in 1991 I assembled the first version of the Virtual Audio Processing System (VAPS). The basic components of the VAPS had to include the following:

- Algorithms that modeled the human hearing. These basic measurements are called Head Related Transfer Functions (HRTF).

- Hardware DSPs that can process the HRTFs in real time.

- Graphic software interface for controlling the positioning of the audio in 3-space.

- Hardware interface for controlling the positioning of the audio in 3-space.

- Analog-to-digital converter (ADC) and digital-to-analog converter (DAC) to get the audio into and out of the computer.

- Hardware DSPs that can process the room acoustics in real time.

- High quality speaker and headphone playback systems.

- A host computer to control and organize all the functions listed above.

Head Related Transfer Function

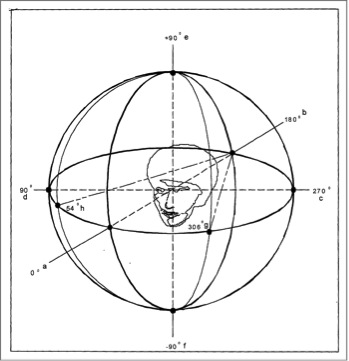

Generating spatial sound and playing it through headphones is a demanding task, since two important factors, the inter-aural level difference (ILD) and the inter-aural time difference (ITD), need to be taken into consideration. Basically, the problem can be solved by the use of head related transfer functions (HRTFs), a set of empirically measured functions, one for each spatial direction. The HRTFs can be completely reconstructed using finite impulse response (FIR) filters with 512 coefficients each.
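As a minimal sketch of the idea, the Python below runs one mono signal through a pair of FIR filters, one per ear, to produce a two-channel head-related signal. The function names and the four-tap filters are hypothetical toy values, chosen only so that the time and level differences between the ears are easy to see. Real filters are measured and, as noted above, use 512 coefficients each.

```python
def fir_filter(signal, taps):
    """Direct-form FIR filter: y[n] = sum over k of taps[k] * signal[n - k]."""
    out = [0.0] * (len(signal) + len(taps) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(taps):
            out[n + k] += x * h
    return out


def spatialize(mono, hrir_left, hrir_right):
    """Filter one mono signal with a left/right impulse response pair."""
    return fir_filter(mono, hrir_left), fir_filter(mono, hrir_right)


# Toy impulse responses (NOT measured data) for a source on the listener's left:
# the right ear hears the sound 3 samples later (ITD) and at half level (ILD).
hrir_left = [1.0, 0.0, 0.0, 0.0]
hrir_right = [0.0, 0.0, 0.0, 0.5]

mono = [1.0, 0.5, -0.25]
left, right = spatialize(mono, hrir_left, hrir_right)

print("left: ", left)   # the signal arrives immediately at full level
print("right:", right)  # the same signal, delayed by 3 samples and halved
```

The nested loop is the slow, obvious form of the filter. It is written this way to show the arithmetic, not as a suggestion of how a real-time system would do it.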

The measurement consists of taking an impulse response of the human head and shoulders in an anechoic environment. An array of speakers is set up along both the horizontal and vertical axes around the person to be measured. Tiny probe microphones are inserted into the ears of the person. A short noise burst covering the full spectrum of human hearing is played through a speaker and recorded via the probe microphones. The physical shape of the ear, the head and the shoulders naturally filters the noise. The difference between the original impulse and the filtered, recorded impulse is then computed (strictly speaking a deconvolution, the inverse of the convolution that the body performs on the sound). This measurement is then used to create a finite impulse response (FIR) filter. This filter is created using specialized audio DSPs. When a normal audio signal is run through this filter, it is turned into a head-related audio signal which, depending on the actual measurement, places the sound in a specific location. Then comes the hard part: switching seamlessly and quickly between measurements to simulate a sound moving through 3-space. This process is compute intensive.
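The step of comparing the played burst with the recorded one can be sketched as a deconvolution: given what went into the speaker and what came out of the probe microphone, solve for the filter in between. The Python below is a simplified illustration under idealized assumptions (no noise, a test signal whose first sample is non-zero, hypothetical function names and toy numbers). It is not a description of how the VAPS measurements were actually processed.

```python
def convolve(signal, impulse_response):
    """What the ear 'does' to a sound: filter it with its impulse response."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(impulse_response):
            out[n + k] += x * h
    return out


def deconvolve(recorded, played, n_taps):
    """Recover the first n_taps of h from recorded = played convolved with h.

    Uses simple time-domain back-substitution, which only works for clean
    data with played[0] != 0 (an assumption made for this sketch).
    """
    if played[0] == 0:
        raise ValueError("played[0] must be non-zero for this simple method")
    h = []
    for n in range(n_taps):
        acc = recorded[n] if n < len(recorded) else 0.0
        for k in range(1, min(n, len(played) - 1) + 1):
            acc -= played[k] * h[n - k]
        h.append(acc / played[0])
    return h


played = [1.0, 0.5]                      # toy test signal sent to the speaker
ear_response = [0.5, 0.25, 0.0, -0.125]  # toy "unknown" response of the ear
recorded = convolve(played, ear_response)

estimated = deconvolve(recorded, played, n_taps=len(ear_response))
print(estimated)  # matches ear_response for this noise-free toy case
```

With real recordings the division is usually done in the frequency domain and needs care around noise, which this sketch leaves out.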

Audio DSPs

The process of creating an FIR requires a considerable amount of computer power. Creating multiple channels of FIR was not possible on a general-purpose computer in 1991, so this number crunching had to be done using specialized audio DSPs controlled by a host computer. I called this the location processor.
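A rough back-of-the-envelope calculation gives a feel for the load. The sketch below counts multiply-accumulate operations for direct FIR filtering with 512 coefficients per ear on four audio channels. The 44.1 kHz sample rate is an assumption made for illustration and is not stated in this article, and the function name is hypothetical.

```python
def fir_macs_per_second(taps, ears, channels, sample_rate_hz):
    """Multiply-accumulates per second for direct (time-domain) FIR filtering."""
    return taps * ears * channels * sample_rate_hz


load = fir_macs_per_second(
    taps=512,               # coefficients per HRTF filter
    ears=2,                 # one filter per ear
    channels=4,             # audio channels processed at once
    sample_rate_hz=44_100,  # ASSUMED sample rate, not taken from the article
)
print(f"{load:,} multiply-accumulates per second")  # 180,633,600
```

That figure covers only the filtering itself, before any switching between measurements or room simulation is added.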

Another area that generates a tremendous amount of calculation is real time room simulation (ambience and reverb). Just the algorithms that create a natural and realistic acoustic room simulation are very demanding on computer power, so those calculations also need to be computed by audio DSPs. Then, these room simulations also need to be spatialized. The various parameters of the room simulator then need to be controlled by the host computer.

In 1991, real-time convolution reverbs did not exist. The room simulator DSPs I chose for the job came in a self-contained, single rack space unit called the Quantec QRS/XL Room Simulator, an amazing sounding reverb processor. I used four of these in a rack for the VAPS.

Graphic Interface

The VAPS needed a software interface that made it easy to see and control the various parameters of both the sound source direction and the room simulation. A graphic interface for this type of application can be extremely complex. The first incarnation of the VAPS was very crude in this regard. But it was simple and understandable for the engineer to operate.

Hardware Interface

A standard keyboard and mouse were required, but controlling the sound positioning in a more intuitive manner in real time required an additional controller. In 1991, controllers for the X, Y and Z axes were rare, if available at all. What I ended up using in the first VAPS was a trackball to move the sound around in 3-space.

Audio Interface

The quality of ADCs and DACs in 1991 was limited. I wanted the best converters I could get at the time, so after careful research I ended up using some of the very first Apogee converters. These sounded very good for that time. The ADCs were stereo and I used two. They could be synchronized using word clock. The original VAPS was a four channel system. In other words, it could spatially process four tracks of audio in real time. The DAC was also Apogee.

The output of the first VAPS was stereo. There was no ability to output the four individual spatially processed tracks. Actually, once a mono audio track is spatially processed, it becomes head-related. Head-related means that it needs a minimum of two channels to be three-dimensional. We hear in 360 degrees using just our two ears. Once an HRTF has been applied to a mono audio track, it becomes a head-related stereo track. So if multiple channel DACs had been available in 1991, it would have had to be an eight channel DAC. Considering the way the first VAPS processed audio, having just stereo outputs was not a problem. I will come back to this topic in more detail later.
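The channel arithmetic can be sketched in a few lines of Python. Four mono tracks each become a left/right pair after spatial processing, which makes eight channels, and summing all the lefts and all the rights brings them back to a single stereo output. The function name and sample values are hypothetical, and plain summation is only an assumption about how such a mix could be done, not a description of the VAPS signal routing.

```python
def mix_to_stereo(binaural_tracks):
    """Sum equal-length (left, right) pairs into one stereo pair."""
    length = len(binaural_tracks[0][0])
    left = [0.0] * length
    right = [0.0] * length
    for track_left, track_right in binaural_tracks:
        for n in range(length):
            left[n] += track_left[n]
            right[n] += track_right[n]
    return left, right


# Four spatially processed tracks, each already a (left, right) pair.
tracks = [
    ([0.5, 0.0], [0.25, 0.0]),
    ([0.0, 0.5], [0.0, 0.25]),
    ([0.25, 0.25], [0.5, 0.5]),
    ([-0.25, 0.0], [0.0, -0.25]),
]

channel_count = sum(len(pair) for pair in tracks)
left, right = mix_to_stereo(tracks)

print(channel_count)  # 8 channels before mixing
print(left, right)    # one stereo pair after mixing
```

Each track keeps its own spatial cues in the sum, because those cues are already encoded in the differences between its left and right channels.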

Speakers and Headphones

My search for the best speakers and headphones was quite exhaustive at that time. For speakers, the requirement was that they be very phase coherent. They needed to be as close to a point source as possible. The frequency response had to be very flat. The amplifiers also had to be top notch. I ended up using the Meyer HD-1 near field monitor. The amplifier is built into the speaker. I used four of these.

The headphone system consisted of a custom built class A amplifier from Head Acoustics of Germany driving the Stax SR-Lambda Pro headphone. The amplifier was capable of driving four Stax SR-Lambda Pro headphones.

Host Computers

Computers in 1991 were still very crude by today’s standards. The first design of the VAPS required two host computers. My choice was a custom built DOS computer to run the spatializing DSPs and a Macintosh IIfx to host the Room Simulators.

Although there were many incarnations, here is a basic block diagram of the original VAPS.

The original VAPS as shown in the diagram used four speakers, two headphone amps and up to six headphones. It also allowed the option of using up to two dummy head microphones. The system had two video monitors, one for the PC, which controlled the location processors, and one for the Mac, which controlled the room simulators. The ADC and DAC were located in the VAPS processing unit rack. All signal routing was done inside the VAPS Control Unit rack. It could spatially process four channels in real time. The cost of this first VAPS was about $200,000, not including labor!

Next month, I will discuss how the VAPS worked and the many ways it could be configured depending on the application.

See you next month here at the Event Horizon!

You can check out my various activities at these links:

http://transformation.ishwish.net

http://currelleffect.ishwish.net

http://www.audiocybernetics.com

http://ishwish.blog131.fc2.com

http://magnatune.com/artists/ishwish

Or…just type my name Christopher Currell into your browser.

Current Headphone System: Woo Audio WES amplifier with all available options, Headamp Blue Hawaii with ALPS RK50, two Stax SR-009 electrostatic headphones, Antelope Audio Zodiac+mastering DAC with Voltikus PSU, PS Audio PerfectWave P3 Power Plant. Also Wireworld USB cable and custom audio cable by Woo Audio. MacMini audio server with iPad wireless interface.

